
There is an old adage in IT, commonly summed up as "It's always DNS". It both pokes fun at and draws attention to the subtle ways that DNS can be responsible for various, seemingly unrelated issues in networked IT systems.

While every IT professional has heard this aphorism, we less often question its veracity. Periodically, I find it worth asking: is it really always DNS? Or even most of the time? More often than not, the gremlin hiding in my systems is not DNS, but something considerably more difficult to orchestrate perfectly.

It's TLS. It's always TLS.

What follows is not a diatribe about why DNS never causes problems; I will certainly grant that it does, and it occasionally has for me. But I would also argue that the subject of this oft-quoted aphorism may be in need of revision. At the very least, I will lay out an argument for why this may be the case, and review some of the commonly overlooked pitfalls with TLS that can break your environment, and your embedded devices in particular.

Background

Today, DNS is ubiquitous, and TLS is almost as ubiquitous, but it wasn't always that way. DNS is a much older technology than TLS, and its age and ubiquity perhaps have something to do with its entrenchment in the saying. For brevity, I won't provide a history of either technology, but DNS has been around since the early 1980s, while SSL, the predecessor to TLS, only dates to the mid-1990s. Even then, the early days of SSL were fraught, as longstanding export controls still restricted the international distribution of cryptographic technologies such as encryption, something challenged by the availability of PGP in the early 1990s. It took until the end of the decade for the most restrictive regulations on cryptography to relax to the point that "cryptography for the masses" became viable in earnest.

For this reason, DNS is in many ways a significantly more mature technology than TLS. It's been around for more than 40 years now, and while DNS continues to evolve (such as with DNSSEC), in many respects "it just works". TLS, on the other hand, still feels somewhat nascent, in ways more befitting a far newer technology. Here, we'll consider some of the ways in which TLS continues to be a complicated pain to deal with.

Certificate Authorities

One of the problems that technologies like TLS need to solve is determining whether a remote entity should be trusted. SSH solves this by warning you the first time you connect to a new host; TLS solves it using certificate authorities (CAs, hereafter). CAs are far from perfect in their own right: read any of the many stories about certificates fraudulently issued by commonly trusted CAs, and it's clear that the CA ecosystem is grappling with its own problems. The integrity of CAs aside, the very existence of CAs creates challenges that IT people need to deal with on a regular basis, making TLS a constant maintenance burden and a source of breakage that is not always easy to detect. I'll come back to this point a few times.

First, security evolves over time. Cryptography evolves. TLS itself evolves. In just under 30 years, we've gone from SSL 2.0 to SSL 3.0, then TLS 1.0, 1.1, 1.2, and now 1.3. Many of these changes are not backwards-compatible (often rightfully so, in the case of security vulnerabilities), and the relatively fast pace of change means that a client and a server built years apart may no longer speak a common version of the protocol.

Embedded Devices

In this post, I'll be focusing on embedded devices. These aren't servers or PCs; they generally don't have much RAM or compute power, but they typically do plug into the network with an Ethernet cable, just like everything else. By and large, most of these devices today support TLS to some (potentially crippled) extent. I deal with a lot of telecom-related networked equipment, such as IP phones, analog telephone adapters (ATAs), and voice gateways. These universally run proprietary firmware released by a vendor, which can be upgraded only up to a point and, in the best case, only until the vendor stops supporting the device. Whatever TLS support these devices have is dictated by the support and flexibility built into the firmware. Many of them allow you to use self-signed certificates, for example, but you'll need to load the CA root certificate into the device for it to be trusted, the same way your browser won't trust a certificate unless the CA is in its (or the operating system's) trust store.

Complicating things further, many of these embedded devices have client certificates. In theory, any TLS client can use a client certificate, but in practice, most don't: your browser could identify itself to a remote server using a certificate, but outside of some corporate intranet systems, you don't see this very often. However, for embedded devices that need to phone home constantly to provision themselves, often in a zero-touch or lite-touch manner, client certificates are useful because they allow the device to "prove" to the server that it really is who it says it is. For example, when I'm provisioning an ATA or IP phone with SIP accounts to connect to an IP PBX like Asterisk, I don't want just any device to be able to ask my provisioning server for the configuration of any device it wants.

With this background, we can then ask, why is it always TLS? Well, here's why.

Why TLS Hates Your Legacy Embedded Devices

TLS 1.0 or Bust

Vendors typically lag behind in their support for modern encryption standards. By the time a device reaches the market, its TLS support is already behind the times, and by the time the device is 5 or 10 years old, it feels positively ancient by current cryptographic standards. It is not uncommon for these devices to support nothing newer than TLS 1.0. In a world where TLS 1.0 is deprecated and considered insecure, and many systems only allow TLS 1.2 or newer by default, this generally means extra hoops to jump through.

In practice, this is not a serious constraint, since it's easy enough to tweak servers (such as an Apache web server running on a Linux system) to allow TLS 1.0 if the support is needed. However, it's something to be aware of. Never assume that all your embedded devices support TLS 1.2. Most of them probably don't.
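Before pointing legacy devices at a server, it can help to check from a workstation whether that server will still negotiate TLS 1.0 at all. The helper below is a minimal sketch, assuming bash and an OpenSSL 1.1.1 or 3.x command-line tool built with TLS 1.0 support; the function name and the hostname in the usage comment are placeholders. The `DEFAULT:@SECLEVEL=0` cipher string is there because OpenSSL 3.x refuses TLS 1.0 at its default security level, even as a client.

```shell
#!/usr/bin/env bash
# accepts_tls10 HOST [PORT]
# Hypothetical helper: exits 0 only if HOST:PORT completes a handshake
# while the client is restricted to TLS 1.0.
accepts_tls10() {
    local host="$1" port="${2:-443}"
    # -tls1 pins the protocol version; SECLEVEL=0 lets OpenSSL 3.x offer
    # the legacy algorithms TLS 1.0 needs. stdin is /dev/null so s_client
    # exits right after the handshake instead of waiting for input.
    openssl s_client -connect "${host}:${port}" -tls1 \
        -cipher 'DEFAULT:@SECLEVEL=0' </dev/null >/dev/null 2>&1
}

# Example (placeholder hostname):
#   accepts_tls10 provision.example.com 443 && echo "TLS 1.0 OK" || echo "no TLS 1.0"
```

This relies on `s_client` exiting nonzero when the connection or handshake fails, which current OpenSSL releases do; grepping the session output for the negotiated protocol is a more defensive alternative.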

SHA1 or Bust

Second, there are the hash algorithms used in TLS. In particular, I'll talk about SHA1, since that is usually what causes problems. SHA1 is no longer considered a cryptographically secure hash algorithm, and hasn't been for some time. Nonetheless, many embedded devices only support certificates signed with SHA1, and don't support SHA256 or other more modern replacements.
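When a device rejects a server certificate for no obvious reason, it is worth checking what that certificate was signed with. A small sketch using the OpenSSL command-line tool; the helper names are hypothetical. It only inspects the signature on the certificate you pass in, so intermediates in the chain should be checked the same way.

```shell
#!/usr/bin/env bash
# cert_sig_alg CERT.pem
# Prints the signature algorithm of a PEM certificate,
# e.g. "sha256WithRSAEncryption" or "sha1WithRSAEncryption".
cert_sig_alg() {
    openssl x509 -in "$1" -noout -text |
        awk -F': ' '/Signature Algorithm/ { print $2; exit }'
}

# is_sha1_signed CERT.pem: exit 0 if the certificate carries a SHA1 signature.
is_sha1_signed() {
    case "$(cert_sig_alg "$1")" in
        sha1*|*SHA1*) return 0 ;;   # sha1WithRSAEncryption, ecdsa-with-SHA1, ...
        *) return 1 ;;
    esac
}
```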

Spotty Support for Free Certificate Authorities

Certificate authority support itself varies. Remember how we said that clients have to trust a set of root CAs? Every certificate a client verifies has to ultimately be traced to one of these trusted root CAs. However, not all clients agree on what CAs are trusted! Recall that operating systems and browsers have to literally maintain a set of root certificates locally, and as the set of root certificates trusted by that platform evolves, updates to the root certificates may be required.

Like many people these days, I make use of free certificate authorities like Let's Encrypt, which have done a lot to increase adoption of TLS by issuing certificates for free. However, as with most free things, there is a catch. Some are obvious, like the short validity period of the certificates, but a less obvious reason to still consider buying a commercial certificate from a legacy CA is compatibility. Case in point: not all embedded devices trust Let's Encrypt certificates, or rather, they don't trust the Let's Encrypt root CA! Effectively, all Let's Encrypt certificates are then treated as if they were self-signed.

Fortunately, you can generally load root certificates manually, and you will need to do so if the CA you are using isn't among the root certificates that happen to be on the device.

Server Name Indication

Depending on your deployment, virtual hosting may not be compatible with all embedded devices. Clients specify the hostname they are requesting in the HTTP Host header, which is almost universally supported. Over a secure connection, however, the server must present the proper certificate during the TLS handshake, which happens before the Host header has been received. This is where Server Name Indication, or SNI, comes into play.

Unfortunately, believe it or not, SNI is not universally supported. This means that if you want to host example.com and example.net on the same machine, you need two IP addresses, one for each hostname, to ensure compatibility. With a larger number of hostnames, this quickly becomes infeasible. The typical workaround is to use a single hostname but a different port for each service, since the port is a Layer 4 construct carried in the TCP header itself and is known before the TLS handshake begins. In the example above, you might have example.com:443, but also example.com:444, example.com:445, etc., all serving encrypted (HTTPS) traffic for provisioning various kinds of devices: port 443 for Vendor A's devices, port 444 for Vendor B's, port 445 for Vendor C's, and so on.
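To see what a device without SNI support will actually receive on a given port, you can tell OpenSSL to omit the extension. A sketch, assuming OpenSSL 1.1.1 or newer (where `-noservername` exists); the helper name and hostname are illustrative. With Apache's name-based virtual hosts, a client that sends no SNI gets the first virtual host defined for that address and port, so this is a quick way to confirm that each vendor's port presents the certificate you expect.

```shell
#!/usr/bin/env bash
# cert_subject_without_sni HOST PORT
# Prints the subject of the certificate the server presents to a client
# that sends no SNI extension, i.e. what a legacy device would see.
# Prints nothing if the connection or handshake fails.
cert_subject_without_sni() {
    local host="$1" port="$2"
    openssl s_client -connect "${host}:${port}" -noservername </dev/null 2>/dev/null |
        openssl x509 -noout -subject 2>/dev/null
}

# Compare with what an SNI-capable client gets (placeholder hostname):
#   cert_subject_without_sni provision.example.com 444
#   openssl s_client -connect provision.example.com:444 -servername provision.example.com \
#       </dev/null 2>/dev/null | openssl x509 -noout -subject
```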

Any one of these factors alone can be a hurdle to keeping things running smoothly. In practice, many of them are often true simultaneously, and when you have hundreds or thousands of these devices pinging your servers, each with its own idiosyncrasies and deficiencies, TLS can become a complete management nightmare.

There is one thing I haven't addressed yet. I mentioned that not all CAs are universally trusted, and that you may need to explicitly provision a device to trust your CA. But if the device doesn't trust your CA, how can you provision it? It's a chicken-and-egg problem. The workaround is to "bootstrap" the device initially over plain HTTP, i.e. with no encryption. In fact, this is what I do when provisioning any embedded device. All devices are initially directed to the provisioning server on port 80 (using DHCP options, BOOTP, or similar mechanisms for mass-provisioning devices), and depending on the type of device and which configuration formats the vendor has programmed it to look for, the device picks up an initial config that "bootstraps" it with the bare minimum needed to successfully negotiate TLS. This might include loading a custom CA certificate, if required. Secrets, e.g. SIP credentials, are never handed out at this stage. The bootstrap config then directs the device to the "real" provisioning server on some HTTPS port, where it picks up its more specific and detailed configuration.

Mutual TLS

How does the server know which configuration to hand out? Even if it knows the request came from one of Vendor A's devices, it doesn't know whether it is Alice's or Bob's. This is where Mutual TLS (or MTLS) comes into play. MTLS makes use of client certificates on devices in the field, which are presented to the server as part of the handshake. It is "mutual" because normally only the client verifies the certificate presented by the server; here, the server also checks the certificate presented by the client. This is not used to set up the secure channel, but rather to verify the authenticity of a particular device. It's common for the device's MAC address to be used as a unique identifier and included in the client certificate.
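On the server side, the identifier usually comes out of the certificate's subject. A minimal sketch for pulling the Common Name out of a PEM client certificate with the OpenSSL CLI, assuming (as with the Polycom certificates later in this post) that the device puts its MAC address in the CN; the helper name is hypothetical. Inside Apache, mod_ssl can expose the same value to the application as `SSL_CLIENT_S_DN_CN` when `SSLOptions +StdEnvVars` is enabled.

```shell
#!/usr/bin/env bash
# client_cert_cn CERT.pem
# Prints the CN from the certificate subject (e.g. a device MAC address).
client_cert_cn() {
    # RFC2253 output looks like "subject=CN=0004f2abcdef,O=Polycom Inc."
    # Strip the "subject=" prefix, prepend a comma so every RDN is
    # preceded by one, then keep only the value after ",CN=".
    openssl x509 -in "$1" -noout -subject -nameopt RFC2253 |
        sed -n 's/^subject= *//; s/^/,/; s/.*,CN=\([^,]*\).*/\1/p'
}

# Example: client_cert_cn polycom_0004f2abcdef.crt   (hypothetical file name)
```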

The Polycom Phones That Mysteriously Stopped Provisioning

This brings me to a recent problem that I was having with my infrastructure. At home, I have a fleet of Polycom SoundPoint IP phones, which I salvaged some time ago from a former employer of mine. I don't actually use IP phones very much; I generally stick to standard analog phones like 2500 sets or 2564 1A2 key phones. However, I do a lot of development with both analog and IP telephony, including IP phones. Apart from using them for testing and R&D, I find it convenient to display certain information on their screens: I show the latest weather forecast, so with a quick glance to one side of my desk, I know what I need to know about the weather for the foreseeable future.

One thing I decided to do when setting up my IP phones, several years ago now, was to have the display backlight vary by time of day. I mostly use SoundPoint 550s, which have an adjustable backlight, so I have the backlights turn on in the morning and turn off at night, after I've gone to bed. This is mainly to reduce "light pollution" at night (though I also like to think it might in some way prolong the life of the screens).

A couple of weeks ago, I noticed that some of my phone backlights were off, even during the day, meaning I couldn't see anything on the screen without first picking up the handset or pressing a button to temporarily brighten it. This was clearly wrong, since the backlights are supposed to be on during the day. Eventually, I had noticed this often enough that it was clear something was broken and I needed to look into it.

It was pretty obvious that this was a provisioning issue. You see, you can't tell a Polycom phone to turn on its backlight in the morning and turn it off at night; you can only tell it to turn the backlight on or off. I provision all of my IP phones hourly, as with most things, and the provisioning server has logic to turn the backlight on during daytime hours and off at night. What had happened was that the phone had provisioned in the middle of the night, probably several weeks earlier, and had then been "stuck" with that configuration, never able to successfully provision itself again. The question now was: why?
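The server-side decision is simple, which is also why a phone can get stuck: it only changes state when a provisioning request succeeds. A minimal sketch of such a rule, assuming a 07:00 to 22:59 daytime window; the real hours, and how the result is written into the phone's config, are not given in this post, so the function and variable names here are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical daytime window (24-hour clock): on from DAY_START up to,
# but not including, DAY_END.
DAY_START=7
DAY_END=23

# backlight_for_hour HOUR -> prints "on" or "off"
backlight_for_hour() {
    # Force base 10 so "08" and "09" from `date +%H` aren't parsed as octal.
    local hour=$((10#$1))
    if (( hour >= DAY_START && hour < DAY_END )); then
        echo on
    else
        echo off
    fi
}

# At provisioning time: backlight_for_hour "$(date +%H)"
```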

Logging into the phone's web portal (as most of these embedded devices have some kind of web portal for quick management or configuration if needed), I checked the realtime logs for the device and saw the following:

0112162602|copy |4|03|SSL_connect error SSL connect error.error:14094415:SSL routines:SSL3_READ_BYTES:sslv3 alert certificate expired

Hmm, well that was odd. I was pretty confident that my TLS certificate wasn't expired. I checked and, sure enough, it didn't expire until 2032. I started poking around to see if anything else was amiss. Finding nothing, I decided to revisit the CA configuration, since for Polycom phones I happened to be using a custom CA and self-signed certificates. The CA appeared to be loaded on the phone, although it was a bit hard to tell, since Polycom's configuration syntax, like pretty much every embedded device vendor's, sucks. With Polycom, it's not enough to load a CA; you also have to control which parts of the device (e.g. provisioning, SIP, or the microbrowser, i.e. the embedded web browser) use which TLS profile, and then configure which certificates each profile uses. This is further complicated by the fact that "All" in Polycom-land often means "Everything, except these other things". Fortunately, that didn't appear to be the case here, and it seemed I had done everything correctly.

One of the first things to do when troubleshooting TLS-related issues is to look at a packet capture of the device's traffic. The server is not always the best place to capture, in case packets aren't making it to the server for some reason, and you generally can't capture on the client either: these sorts of devices rarely support packet captures, and even when they do, it's best to avoid them, as the chances of them being buggy are greater than you would think. The standard approach is therefore a port mirror. In my case, all the Polycom phones are connected to a PoE switch, and one port on the switch is set up as a port mirror, connected to a second NIC on another server. I can then log into that server and run tshark to capture all the packets to and from the device (anything that traverses the switch interface). When it's done, I transfer the capture back to my desktop and open it in Wireshark.
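The capture-and-inspect step can be sketched in a few lines of bash. This assumes tshark is installed on the mirror-port server and runs with capture privileges; the interface name and device IP are placeholders, and the field names are those used by Wireshark 3.x and later (older releases used an `ssl.` prefix instead of `tls.`).

```shell
#!/usr/bin/env bash
# capture_device IFACE DEVICE_IP OUTFILE
# Capture everything to and from one device on the mirror-port NIC.
# Runs until interrupted (Ctrl-C).
capture_device() {
    local iface="$1" device_ip="$2" out="$3"
    tshark -i "$iface" -f "host ${device_ip}" -w "$out"
}

# list_tls_alerts CAPTURE.pcap
# One line per TLS alert: frame number, sender, receiver, alert description.
list_tls_alerts() {
    tshark -r "$1" -Y 'tls.alert_message' \
        -T fields -e frame.number -e ip.src -e ip.dst -e tls.alert_message.desc
}

# Example (placeholders): capture_device eth1 10.0.0.50 polycom.pcap
#                         list_tls_alerts polycom.pcap
```

The `ip.src` and `ip.dst` columns deserve careful reading: an alert names a problem, but only its direction tells you which side detected it. The description is typically printed as its numeric code (45 is certificate_expired).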

While packet captures are something you should do, they aren't a magic solution either. In this case, I saw the same error in the packet capture, but no further context as to its cause.

More Certificate Errors

They say that insanity is doing the same thing over and over again and expecting a different result. Having nearly gone insane, at some point I decided to try something different, as I wasn't getting anywhere. I started by switching from my self-signed certificate back to the Let's Encrypt certificate used by many of the other virtual hosts handling requests for other vendors' (non-Polycom) devices. I was pretty sure it wouldn't work, since I remembered deliberately switching away from it at some point, but I couldn't remember exactly why (note to self: always take notes!).

The Let's Encrypt certificate didn't work, but did give me a different error:

0113085655|copy |4|03|SSL_connect error Peer certificate cannot be authenticated with known CA certificates.SSL certificate problem, verify that the CA cert is OK. Details: error:14090086:SSL routines:SSL3_GET_SERVER_CERTIFICATE:certificate verify failed

Remember what I said about Let's Encrypt not being supported by all devices? That seemed likely to be what was going on here, so I set about solving that. Let's Encrypt currently has two root certificates, so I tried loading the first root cert, then the other. Neither worked. Both combined? Nope. Intermediate certs? Shouldn't be required. No matter what I tried, I kept getting the same error that the certificate couldn't be verified with known CA certificates.

At some point, I was talking to a friend of mine about this issue, and he suggested I point the phone at his server to see what would happen. Enter yet a third error:

0113175905|copy |4|03|SSL_connect error SSL connect error.error:1407742E:SSL routines:SSL23_GET_SERVER_HELLO:tlsv1 alert protocol version

In this case, his server didn't support TLS 1.0 anymore (remember what we said about that? And about not all clients supporting anything newer than it?), so this error was not entirely unexpected. Still, it didn't help at all.

At some point, I went ahead and generated a new CA and self-signed certificate to test with the phone:

openssl genrsa -out provision_root.key 4096
openssl req -x509 -new -nodes -key provision_root.key -sha256 -days 4096 -out provision_root.crt
openssl genrsa -out provision_selfsigned.key 2048
openssl req -new -key provision_selfsigned.key -out provision_selfsigned.csr
openssl x509 -req -in provision_selfsigned.csr -CA provision_root.crt -CAkey provision_root.key -CAcreateserial -out provision_selfsigned.crt -days 4096 -sha256

No luck. It did occur to me at some point that perhaps the full chain was required for the client to be able to successfully verify against the root CA, and so I manually created a full chain cert file:

cat provision_selfsigned.crt provision_root.crt > provision_fullchain.crt

Still no cigar. I then created another self-signed cert, this time using the ast_tls_cert wrapper script included with Asterisk:

contrib/scripts/ast_tls_cert -C provision.example.com -O "Provisioning" -d /tmp -o provision -e

I recalled using this once before, when things were working, so maybe there was something I had overlooked. Things like that do happen, after all, especially with TLS, which has a lot of moving parts. But amazingly, I was still getting an error that made no sense:

0113210323|copy |4|03|SSL_connect error SSL connect error.error:14094415:SSL routines:SSL3_READ_BYTES:sslv3 alert certificate expired

Except I'd already established the certificate wasn't expired, and here I was, back at the beginning.
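In hindsight, the phone's log lines carried more information than they appeared to. In OpenSSL 1.0.x-style codes like the `error:14094415` part, the library, function, and reason are packed into one number, and OpenSSL reports a fatal TLS alert received from the peer as reason 1000 plus the alert number. A small bash decoder, assuming that older packing (library in the top 8 bits, function in the next 12, reason in the low 12); OpenSSL 3.x lays out its codes differently, so this does not apply to its error strings. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# decode_ssl_error HEXCODE
# Splits an OpenSSL 1.0.x packed error code into library, function and
# reason numbers. Reasons of 1000 and above encode a TLS alert that was
# *received from the peer*: alert number = reason - 1000.
decode_ssl_error() {
    local code=$((16#$1))
    local lib=$(( code >> 24 ))
    local func=$(( (code >> 12) & 0xFFF ))
    local reason=$(( code & 0xFFF ))
    if (( reason >= 1000 )); then
        echo "lib=$lib func=$func reason=$reason received_alert=$(( reason - 1000 ))"
    else
        echo "lib=$lib func=$func reason=$reason"
    fi
}
```

Applied to the logs above, `14094415` decodes to reason 1045, i.e. alert 45 (certificate_expired) received from the server; `1407742E` decodes to reason 1070, alert 70 (protocol_version), also received from the server; and `14090086` decodes to reason 134, a certificate verification failure detected locally on the phone rather than an alert from the other side.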

Revisiting the Client Certificate

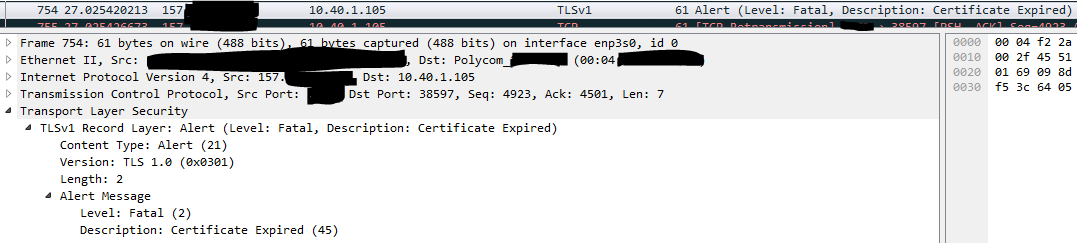

It was time for another packet capture. Something didn't make sense about this, and it was time to take a closer look. Notice anything odd here?

The error "Certificate Expired" is plain enough. But notice that the packet is being sent from the server (which I've partially redacted) to the client (which has a private Class A IP address). That's right: the server is telling the client that the certificate is expired, not the other way around, as I'd been assuming this whole time. But you know what they say about assumptions.

Naturally, this changed things quite a bit. The server's certificate wasn't expired, and I knew it. And the server wouldn't complain to the client that its own certificate was expired, so maybe the client's certificate was expired?

This didn't quite make sense to me, as the way I have Apache set up is to allow all TLS requests, without doing any validation of the certificate. All of this is handled by the provisioning application, which has its own custom (and hairy) logic to deal with the reality that is embedded device provisioning. If you want to request a client certificate in Apache but not verify it, you can specify SSLVerifyClient optional_no_ca, and that's what I had in all my provisioning virtual hosts (well, except the HTTP one on port 80).

That's when it occurred to me that I had changed the SSLCertificateFile and SSLCertificateKeyFile settings repeatedly, but I hadn't changed anything pertaining to MTLS. It didn't really make any sense, but I had tried everything else, why not try that?

So I changed SSLVerifyClient optional_no_ca to SSLVerifyClient none.

And just like that, it worked! Well, sort of. The phone completed TLS negotiation, something I had been troubleshooting for days and that hadn't worked in weeks. But since I had disabled MTLS altogether, no client certificate was sent, so none of the information in it was available to prove to the server which IP phone this was, and therefore which configuration to give it! I had won a battle, but not yet the war.

To compare, I reverted the setting, and sure enough, the negotiation issue came back. So the client certificate had probably expired. In fact, Polycom confirms on its website that the device certificate expires 15 years after the date of manufacture, so the IP phone was probably about 15 years old. That didn't make it unusable. I was a little irritated with Polycom at this point for not using a longer-lived client certificate. Was this a subtle ploy to brick a bunch of IP phones and get people to throw out their unsupported devices and buy new, supported ones? Probably. But even if it was, it shouldn't have caused everything to break the way it had. An expired certificate should still be accepted by the web server, and I would then ignore the expiry in my application logic, right?

Right?

That's when I decided to do something that would never have occurred to me originally. I opened up the Apache web server bug tracker and did a quick search for "expire". Literally, that was all I searched for. And sure enough, on the first page of results:

BUG 60028: mod_ssl does not accept expired client certificates even with SSLVerifyClient optional_no_ca
Changed 2016-08-22
mod_ssl does not accept expired client certificates even if the SSLVerifyClient directive is set to "optional_no_ca". Self-signed certificates are accepted, but expired certificates are not. IMHO this doesn't match the description in the official manual and is thus a bug: "optional_no_ca: the client may present a valid Certificate but it need not to be (successfully) verifiable"

Yup. I'd just been bitten by an eight-and-a-half-year-old bug in the Apache web server. Ouch!

Well, what to do next? Amazingly, such a basic yet important flaw in the TLS module of one of the world's most widely used web servers had not been fixed, even though it had been known for over eight years. From experience, reporting a bug is helpful, but fixing the bug yourself is often the only way to get it resolved. Nobody cares more deeply about squashing a bug than those affected by it.

However, after doing another search, it turned out that somebody else had, in fact, already written a patch for this very problem.

A new SSL directive SSLVerifyAcceptExpiredClient (on/off) would allow the SSL engine to accept a client certificate with an expired notAfter date. The motivation is to allow some client (old embedded, non upgradeable device) to still access a server. The attached patch build over httpd trunk 2.5 creates a new directive and corresponding flags in the server and directory configuration structures. The expiration error bypass is performed in ssl_callback_SSLVerify (ssl_engine_kernel.c)

Old embedded, non-upgradeable device. Sound familiar, anyone?

The patch seemed like it could work, but I never tested it. Fundamentally, I agreed with the reporter of bug 60028 that this was a bug, not an enhancement that warranted a new setting. That's why I ultimately submitted my own patch to the project, so that the issue can be fixed properly for everyone. The patch hasn't been merged by the maintainers yet, and even once it is, it'll likely be some time before it makes its way into the Debian packages, so I'll probably be compiling Apache from source for the near future.

In retrospect, I feel almost lucky to have stumbled upon this when I did. Client certificates expire eventually, and though I still fault Polycom for not using a longer validity period, sooner or later things would have stopped working the way they did, and I would have discovered that Apache had this massive bug with its SSLVerifyClient setting. Neither problem on its own would have caused this issue; it took both to actually cause breakage.

Solution

With my patch applied, I compiled the Apache web server and PHP from source. There is a bit of an art to this, as Apache needs to be compiled in a very specific way to match the configuration that the Debian packages use, since I didn't want to change any of my existing configuration. PHP also needs many additional options passed to configure to ensure that everything the Debian PHP packages normally provide is available. On the upside, I now have more modern versions of both Apache and PHP than the packages would provide.

As expected, Apache no longer rejects requests from Polycoms with expired certificates. I actually did an inventory of mine, to check when they actually expired:

# echo -n polycom_000* | xargs -d' ' -I {} openssl x509 -enddate -noout -in {}
notAfter=Nov 15 00:12:50 2035 GMT
notAfter=Nov 15 00:17:52 2035 GMT
notAfter=Dec 21 04:36:33 2024 GMT
notAfter=Dec 21 04:49:06 2024 GMT
notAfter=Dec 21 09:05:32 2024 GMT
notAfter=Dec 21 09:13:12 2024 GMT
notAfter=Dec 21 18:54:38 2024 GMT
notAfter=May 23 06:36:52 2026 GMT

As you can see, 5 out of the 8 certificates expired in December 2024, which roughly lines up with when everything seemed to break, so that makes sense enough.
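Eyeballing `notAfter` dates works for eight phones; for a larger fleet it may be easier to have the tool do the comparison. A minimal sketch built on `openssl x509 -checkend`, which exits 0 if the certificate will not expire within the given number of seconds and nonzero if it will; the function name and the 30-day window in the usage line are illustrative choices.

```shell
#!/usr/bin/env bash
# report_cert_expiry WINDOW_DAYS CERT...
# Prints one status line per certificate: unreadable, expired,
# expiring within WINDOW_DAYS days, or ok.
report_cert_expiry() {
    local days="$1"; shift
    local cert
    for cert in "$@"; do
        if ! openssl x509 -in "$cert" -noout >/dev/null 2>&1; then
            echo "$cert: unreadable"
        elif ! openssl x509 -in "$cert" -noout -checkend 0 >/dev/null; then
            echo "$cert: expired"
        elif ! openssl x509 -in "$cert" -noout -checkend $(( days * 86400 )) >/dev/null; then
            echo "$cert: expires within ${days} days"
        else
            echo "$cert: ok"
        fi
    done
}

# Usage: report_cert_expiry 30 polycom_000*
```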

Of course, with Apache patched to only soft fail on seeing an expired cert, the value of $_SERVER['SSL_CLIENT_VERIFY'] is only GENEROUS, not SUCCESS. All this means is that a client cert was provided, but it wasn't validated against the configured CA or any other, for that matter. Thus, any client could provide any client certificate at all! This is the same as not doing MTLS to begin with, so we need to add our own additional logic to verify the cert.

In theory, I could have also patched Apache, perhaps with an additional option, to return SUCCESS instead of GENEROUS for a certificate that was expired, but otherwise good. For now, I'm doing it in the application, manually shelling out to the OpenSSL command line tool:

openssl verify -no_check_time -CAfile polycom_root_ca.crt -untrusted <(cat polycom_intermediate_*.pem) -cert "$1"

OpenSSL needs not just the Polycom root cert, but also the appropriate intermediate certs to verify device certificates. Polycom publishes all of its certs online. Once you download them, you need to convert them from the binary DER format into the ASCII PEM format. For example, to convert the needed intermediate certs:

# openssl x509 -inform der -in 'Polycom Equipment Issuing CA 1.crt' -out polycom_intermediate_eqp1.pem
# openssl x509 -inform der -in 'Polycom Equipment Issuing CA 2.crt' -out polycom_intermediate_eqp2.pem
# openssl x509 -inform der -in 'Polycom Equipment Policy CA.crt' -out polycom_intermediate_policy.pem

Then, you can go ahead and manually verify the certificate. Of course, a first attempt to do so will result in an error that the certificate has expired.

# openssl verify -CAfile /etc/ssl/private/polycom_root_ca.crt -untrusted <(cat polycom_intermediate_*.pem) -cert polycom_MAC.crt
O = Polycom Inc., CN = MAC
error 10 at 0 depth lookup: certificate has expired
error polycom_MAC.crt: verification failed

Well, no duh, that's why we're doing this workaround in the first place. Simply ignore the certificate expiration and repeat:

# openssl verify -no_check_time -CAfile /etc/ssl/private/polycom_root_ca.crt -untrusted <(cat polycom_intermediate_*.pem) -cert polycom_MAC.crt
polycom_MAC.crt: OK

That's it! You can now put the verification logic in a shell script that you can call from your provisioning application. The verify command returns 0 on a successful verification and nonzero (typically 2) if it fails to verify.
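A minimal sketch of such a wrapper, assuming the PEM intermediates have been concatenated into one bundle file up front and that the provisioning application has written the client certificate to a file (mod_ssl can export it as `SSL_CLIENT_CERT` when `SSLOptions +ExportCertData` is set). The function and bundle names are hypothetical; here the certificate is passed to `openssl verify` as a positional argument.

```shell
#!/usr/bin/env bash
# verify_device_cert CERT ROOT_CA INTERMEDIATES_BUNDLE
# Succeeds if CERT chains to ROOT_CA through the PEM intermediates in
# INTERMEDIATES_BUNDLE, ignoring validity dates so that expired but
# otherwise genuine device certificates still pass.
# Returns the exit status of `openssl verify` (0 on success).
verify_device_cert() {
    local cert="$1" root_ca="$2" intermediates="$3"
    openssl verify -no_check_time \
        -CAfile "$root_ca" \
        -untrusted "$intermediates" \
        "$cert" >/dev/null 2>&1
}

# Usage (hypothetical file names):
#   cat polycom_intermediate_*.pem > polycom_intermediates.pem
#   verify_device_cert "$client_cert_file" polycom_root_ca.crt polycom_intermediates.pem
```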

I discovered in testing this process that some of my Polycoms additionally failed to verify this way. Instead of OK, I got error 18 at 0 depth lookup: self-signed certificate. While most Polycom devices have a certificate issued by the Polycom CA, some do not. From "Device Certificates on Polycom Phones Feature Profile 37148":

Polycom UC Software devices support several types of device certificates. There is one ‘built-in’ certificate. In most cases this certificate will have been installed at the Polycom manufacturing facility. If however there is no factory installed certificate, the device will generate a ‘self signed certificate’ to be used as the ‘built-in’ certificate... There are two possible types of ‘built-in’ device certificates. They can be either a factory installed certificate (most common) or a self-signed certificate, which is created by the device itself if no factory installed certificate exists.

So, the two phones with a certificate expiring in 2035 (which are the self-signed ones) are, ironically, actually the oldest of the Polycom phones. And, even with the workaround for expired certificates, there isn't a solution for the self-signed certificates. The only remaining solution at this point would be to set up a custom PKI for Polycom phones and issue unique certificates to phones that never had a factory certificate, but setting up a PKI for Polycoms was something I was trying to avoid in the first place and is outside the scope of this article. As is often the case, there is no perfect solution here.
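Even without a fix for the self-signed phones, it helps to know which phones fall into which group. A rough sketch that compares a certificate's subject and issuer; this is a heuristic (a self-signed certificate has identical subject and issuer, but the check does not verify the signature itself), and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# dn_of subject|issuer CERT: prints that DN in RFC 2253 form.
dn_of() {
    openssl x509 -in "$2" -noout "-$1" -nameopt RFC2253 | sed 's/^[a-z]*= *//'
}

# looks_self_signed CERT: exit 0 if subject and issuer are identical.
looks_self_signed() {
    [ "$(dn_of subject "$1")" = "$(dn_of issuer "$1")" ]
}

# Sort a fleet into the two groups (hypothetical file names):
#   for c in polycom_000*; do
#       if looks_self_signed "$c"; then echo "$c: self-signed"; else echo "$c: CA-issued"; fi
#   done
```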

Overall, this was a somewhat convoluted issue to solve, with several moving parts. I have spent probably fifteen to twenty hours investigating it and developing solutions over the past two months. There were actually two overarching issues: Polycom phones with an expired certificate that otherwise validates against the Polycom root CA, and Polycom phones with no factory-issued certificate at all, whose certificates aren't expired but don't validate against anything (arguably the worse issue to deal with).

It's always TLS, and when it is, well, it really is.

Conclusion

At this point, I anticipate one natural objection. Polycom may be wrong to issue client certificates that expire while the device is still functional, but a custom device cert can also be uploaded to the device (though not easily if it has already expired). Apache may be wrong to reject expired client certs when it shouldn't be validating them, but that bug can be fixed. But, you may ask, am I not wrong to be using end-of-life devices with their now-degraded TLS support in my environment, and is this experience not my own punishment for doing so?

To some extent, I think it would be reasonable to argue it is. At the same time, I would argue that it is not. We live in a mass consumer culture of waste and turnover, particularly in the IT world. I have 15- to 20-year-old computers, and they have no such issues with TLS interoperability. Granted, I realize that a full desktop or server running Windows or Linux is hardly a fair comparison to make against a locked-down embedded device running proprietary firmware. However, I do not believe mere TLS degradation should be allowed to forcibly obsolesce otherwise perfectly functional devices. The Polycom SoundPoint 550s are a good example of this. As far as IP phones go, I believe they are fine devices. The screen is nice, the device's capabilities are decent, and even compared against Polycom's newer lines of IP phones, such as the VVX series, I believe they are still perfectly adequate today. Of course, they are no longer supported by Polycom. They are older devices, and as discussed, some challenges with TLS are to be expected, especially as time goes on. But I want to be very clear that end of support is not the same as end of life. It is not up to vendors to dictate when we should throw our equipment in the scrap pile for recycling (or worse, the landfill). The world is already on a calamitous course, and e-waste is something it very much needs far less of.

I bring this up because, very often, it is TLS, more so than any other factor, that obsolesces embedded devices such as the telecom appliances I have discussed here. I am not saying that this is always intentional or by design, but frankly, it doesn't matter whether it is or isn't. I believe that, as IT professionals, we have a responsibility to do our best to make sure that the technology we manage can serve out as much of its useful life as possible. And that means not letting that useful life be shortened by things as silly as device certificates expiring, or even a lack of support for TLS 1.2. It may not be ideal, but the world in which we live, and in which these devices exist, is far from ideal.

And that is the reality about TLS. It has a tendency to force its hairy tentacles into everything, especially given that cryptography is the foundation of modern networked systems. Embedded devices, in particular, can pose challenges when it comes to ongoing operations and maintenance. Unlike DNS, which can keep working as-is if left unchanged, TLS involves systems in constant flux: certificates, certificate authorities, devices, vendors, protocols, signature algorithms, etc. All this change can and does create breakage over time. My Polycoms were working fine a couple of months ago, and then they stopped provisioning because of an 8-year-old bug in the Apache web server. This is the imperfect reality of the TLS ecosystem.

I don't want to be dismissive of whopping DNS issues that people may have struggled with in the past (though I also wonder how many people have ever had to compile their own DNS server in response to a DNS bug; see what I mean?), but I hope I've made a case for why TLS can present equally, and often significantly more, complicated and difficult problems to solve in the 21st century. Certainly, this bias reflects my own experiences: I've never had to struggle much with DNS, whereas entire weeks of my life, and potentially even months, have been spent troubleshooting problems related to TLS, the incident with the Polycoms being just a small fraction of that. Even when everything seems stable, it's only a matter of time until something breaks; that's virtually by design with TLS.

And that is why it's always TLS.
