16 min read | 4530 words | 524 views | 0 comments

A couple weeks ago, I discovered that one of my Asterisk servers was occasionally getting into a state where no new channels could be created, due to having used all available file descriptors (1024, which is a lot). It was pretty clear that we were looking at a file descriptor leak here.

Normally, this wouldn't be a hard issue to debug, as Asterisk comes with a DEBUG_FD_LEAKS compiler option that will let you see all open file descriptors, supposedly, by running core show fd from the CLI. This is to allow developers to easily debug possible file descriptor leaks.

Well, that didn't help much this time. The file descriptors in question didn't show up in the output of core show fd at all. However, if I checked /proc/PID/fd, then sure enough, there were more than 1,000 file descriptors open by the Asterisk process. Clearly, something was not right.

Figuring out the general cause of the fd leak was actually pretty straightforward, thanks to tools like netstat and lsof - for example:

# netstat -ntup | grep "CLOSE_WAIT" tcp 64 0 REDACTED:52506 104.21.48.12:443 CLOSE_WAIT 16501/asterisk tcp 64 0 REDACTED:45834 172.67.175.181:443 CLOSE_WAIT 16501/asterisk # lsof -i -a -p 16501 | grep "CLOSE_WAIT" asterisk 16501 asterisk 72u IPv4 28873098 0t0 TCP REDACTED:52506->104.21.48.12:https (CLOSE_WAIT) asterisk 16501 asterisk 77u IPv4 28940733 0t0 TCP REDACTED:45834->172.67.175.181:https (CLOSE_WAIT)

CLOSE_WAIT is never a good sign. It basically means that we're done using the socket, but it hasn't been closed yet. Do this enough times, and you'll starve your process of file descriptors.

The obvious question to answer was: what's responsible for opening these sockets (as clearly, they are sockets), and not closing them?

The first clue was that the IP addresses involved on the remote side were always Cloudflare IP addresses on port 443. So, clearly some kind of HTTPS web traffic. Okay, that was helpful, but it could have been many things - cURL requests in the Asterisk dialplan (and any number of them) or in a module itself. That was the natural thing to think, at least.

I noticed that the leaks were somewhat "spurty" - I would often get a few in a minute, and then sometimes none for a while. They didn't seem to line up with call traffic necessarily, either, which was strange. I started using the watch tool to poll the commands above to show me how many CLOSE_WAIT connections there were, so I would know the second a new one showed up, but watch turned out to be insufficient for the task at hand. So after briefly testing a simple shell one-liner (while true; do date +"%T"; ls /proc/13879/fd/ | wc -w; sleep 2; done), I spent a minute writing a simple shell script that I could run on one monitor that would let me know visually (and more importantly, audibly, by ringing the terminal bell), when there was a new leak. Occasionally, CLOSE_WAIT sockets appear for a brief second and disappear, but there were actually relatively few false positives with this method:

#!/bin/sh

astpid=`cat /var/run/asterisk/asterisk.pid` # get the Asterisk PID from the run file

lastleaked=0

while true

do

date +"%T" | tr -d '\n' # Print out the current time, no newline

printf " - $astpid - " # Print the Asterisk PID, for convenience

leaked=`lsof -i -a -p $astpid | grep "CLOSE" | wc -l` # Print how many CLOSE_WAIT connections Asterisk has

echo $leaked

if [ "$lastleaked" != "$leaked" ]; then

printf "ALERT!!!!!!!!!!!!\n"

printf "\a" # Ring the bell to grab my attention

lsof -i -a -p $astpid | grep "CLOSE" # show the leaky sockets now

/sbin/asterisk -rx "core show fd" # show Asterisk's fd records now

fi

lastleaked=$leaked

sleep 2 # poll every 2 seconds

done

The script above is pretty straightforward: using a pipeline of commands that spits out how many CLOSE_WAIT socket connections the process owns, it will alert the user when a new CLOSE_WAIT connection shows up.

I initially thereafter expanded on the above to also automatically start strace and tshark as background jobs and kill them when a new leak occured, and though this worked, in trying to do fancier things, the complexity grew cumbersome, and I ended up reverting to the simple script above rather quickly.

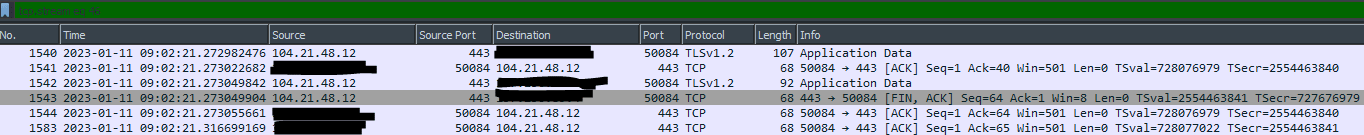

The next thing I did was take some packet captures using tshark on the server itself. This was where things started to get really interesting. Do you notice anything strange about this packet capture?

All the packet captures showed something like this, around the time that I would see new leaks. Frustraingly, there was no TLS handshake present to analyze. What seemed oddest to me was that the first packet was from the web server to my server. Usually, HTTPS requests are initiated by the client. It made no sense that a random HTTPS server would suddenly reach out to my server and start talking to it, and what was more, on random ports each time. TCP sockets don't work that way. Asterisk wouldn't be listening on such ports, so it seemed like I was missing something here, since Asterisk would almost certainly be calling connect() to initiate an outgoing connection.

The packet capture was interesting, but not enough - something was missing. I could see there was some HTTPS traffic, but why and how, the packet capture couldn't say.

It was time to turn to strace, the Linux utility that lets you monitor all system calls that a process makes. Anything socket-related was sure to show up here.

Except, it didn't turn out that way. I would run strace with strace -ffp PID -o /tmp/aststrace.txt for a little bit, and as soon as I was alerted to a leak by my monitoring script, I'd immediately stop the strace job I had running and then run lsof -i -a -p PID | grep "CLOSE" to get the file descriptor numbers that were leaked. From there, I'd just grep -R "(" in the directory with the strace logs.

Nothing. There were absolutely no records of the file descriptor in the strace logs. I grepped across all the files in the directory, and there were no matches. I thought it possible that perhaps my syntax was excluding something legitimate, but it seemed unlikely, just based on the format of the strace logs themselves.

This to me made no sense at all. strace logs *all* system calls that do I/O. If the Asterisk process was doing anything with this socket, which the kernel was saying it was, then strace would see it. Once again, this was incomplete, and I was coming up short somewhere. How could strace fail me?

It occured to me that it would be convenient to have strace log system calls by file descriptor number, rather than by thread ID. Unfortunately, no such capability exists in strace (well, short of firing up 1,024 strace commands in parallel, to each individually log only a particular file descriptor), so I would have to find another way.

I struggled with this a few times, until eventually I had the thought to run strace from when Asterisk started and just leave it running. I'd previously been periodically stopping strace and truncating the log files every few minutes, to avoid filling up the disk, since strace, if left running for a while, will generate a lot of logs (easily a GB in just a couple hours). I didn't have much free disk space available, so I naturally felt the urge to avoid filling the disk all the way up, as I'd done previously in the past more times than I care to admit.

Well, the time I left it running until the first leak, I finally got some output from strace. After parsing through the relevant output, it was clear that this was what I was looking for:

strace.10729-socket(AF_INET, SOCK_STREAM, IPPROTO_TCP) = 73

strace.10729:setsockopt(73, SOL_TCP, TCP_NODELAY, [1], 4) = 0

strace.10729:fcntl(73, F_GETFL) = 0x2 (flags O_RDWR)

strace.10729:fcntl(73, F_SETFL, O_RDWR|O_NONBLOCK) = 0

strace.10729:connect(73, {sa_family=AF_INET, sin_port=htons(443), sin_addr=inet_addr("104.21.48.12")}, 16) = -1 EINPROGRESS (Operation now in progress)

strace.10729-poll([{fd=73, events=POLLPRI|POLLOUT|POLLWRNORM}], 1, 0) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLOUT}, {fd=71, events=POLLIN}], 2, 1) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLPRI|POLLOUT|POLLWRNORM}], 1, 0) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLOUT}, {fd=71, events=POLLIN}], 2, 1) = 1 ([{fd=73, revents=POLLOUT}])

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLPRI|POLLOUT|POLLWRNORM}], 1, 0) = 1 ([{fd=73, revents=POLLOUT|POLLWRNORM}])

strace.10729:getsockopt(73, SOL_SOCKET, SO_ERROR, [0], [4]) = 0

strace.10729:getpeername(73, {sa_family=AF_INET, sin_port=htons(443), sin_addr=inet_addr("104.21.48.12")}, [128->16]) = 0

strace.10729:getsockname(73, {sa_family=AF_INET, sin_port=htons(48720), sin_addr=inet_addr("REDACTED")}, [128->16]) = 0

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-openat(AT_FDCWD, "/etc/ssl/certs/ca-certificates.crt", O_RDONLY) = 74

strace.10729-fstat(74, {st_mode=S_IFREG|0644, st_size=198416, ...}) = 0

strace.10729-read(74, "-----BEGIN CERTIFICATE-----\nMIIH"..., 4096) = 4096

strace.10729-read(74, "8B1\nRXxlDPiyN8+sD8+Nb/kZ94/sHvJw"..., 4096) = 4096

strace.10729-read(74, "FoXDTMwMTIzMTE0MDgyNFowRDEL\nMAkG"..., 4096) = 4096

strace.10729-read(74, "jgdYZ6k/oI2peVKVuRF4fn9tBb6dNqcm"..., 4096) = 4096

strace.10729-read(74, "LBQAwPDEeMBwGA1UE\nAwwVQXRvcyBUcn"..., 4096) = 4096

strace.10729-read(74, "jl0qhqdNkNwnGjkCAwEAAaNFMEMwHQYD"..., 4096) = 4096

strace.10729-read(74, "BDGR8Dk5AM/1E9V/RBbuHLoL7ryWPNbc"..., 4096) = 4096

strace.10729-read(74, "+vZ\n5nbv0CO7O6l5s9UCKc2Jo5YPSjXn"..., 4096) = 4096

strace.10729-read(74, "vTwJaP+EmzzV1gsD41eeFPfR60/IvYcj"..., 4096) = 4096

strace.10729-read(74, "D7eTiew8EAMvE0Xy02i\nsx7QBlrd9pPP"..., 4096) = 4096

strace.10729-read(74, "EQMA4GA1UE\nBwwHU2FsZm9yZDEaMBgGA"..., 4096) = 4096

strace.10729-read(74, "YTAkRF\nMRUwEwYDVQQKDAxELVRydXN0I"..., 4096) = 4096

strace.10729-read(74, "swCQYDVQQGEwJVUzEVMBMGA1UEChMMRG"..., 4096) = 4096

strace.10729-read(74, "1MVYwnSlcUfIKdzXOS0xZKBgyMUNGPHg"..., 4096) = 4096

strace.10729-read(74, "GgPVs93rnD5/ZpKmbnJeFwMDF/k5hQpV"..., 4096) = 4096

strace.10729-read(74, "1QnDE6u/LDsg0qBIimAy4E5S2S+zw0JD"..., 4096) = 4096

strace.10729-read(74, "wFAYDVQQKEw1FbnRydXN0LCBJbmMuMTk"..., 4096) = 4096

strace.10729-read(74, "MB4XDTE0\nMTEyNjA1MTMxNVoXDTQwMTI"..., 4096) = 4096

strace.10729-read(74, "Z5049sJQ6fLjkZHAOkrprlOQcJ\nFspjs"..., 4096) = 4096

strace.10729-read(74, "ggEPADCCAQoCggEBAMwldpB5BngiFvXA"..., 4096) = 4096

strace.10729-read(74, "Jh\nwZG7SMA0j0GMS0zbaRL/UJScIINZc"..., 4096) = 4096

strace.10729-read(74, "ynqI\n4uJEvlz36hz1\n-----END CERTI"..., 4096) = 4096

strace.10729-read(74, "\nM4GwVhO+5lFc2JsKT0ucVlMC6U/2DWD"..., 4096) = 4096

strace.10729-read(74, "3DQEBAQUAA4ICDwAwggIKAoICAQCnUBn"..., 4096) = 4096

strace.10729-read(74, "UmQbC1TR0zvsQD\nyCV8wXDbO/QJLVQnS"..., 4096) = 4096

strace.10729-read(74, "ADCCAQoCggEBAOn4j/NjrdqG2KfgQvvP"..., 4096) = 4096

strace.10729-read(74, "WwgUm9vdCBHQSBDQTAeFw0w\nNTEyMTEx"..., 4096) = 4096

strace.10729-read(74, "k3sg3Spx2zY7ilKhSoGFPlU5tPaZQeLY"..., 4096) = 4096

strace.10729-read(74, "ftslWZvB1JdxnwQ5hYIiz\nPtGo/KPaHb"..., 4096) = 4096

strace.10729-read(74, "FUhWMXhOp8q3crhkODZ\nc6tsgLjoC2ST"..., 4096) = 4096

strace.10729-read(74, "ZcpuX\n5k8wDgYDVR0PAQH/BAQDAgGGMA"..., 4096) = 4096

strace.10729-read(74, "jVJ0bmBHDOJx+PYZspQ9AhnwC9FwCTyj"..., 4096) = 4096

strace.10729-read(74, "TZWN1cmVUcnVzdCBDQTCCASIwDQYJKoZ"..., 4096) = 4096

strace.10729-read(74, "JtzVHGpxxpp9Hp3dfGzGjGdnSj74cbAZ"..., 4096) = 4096

strace.10729-read(74, "MDMyNTExMDMxMFowWjELMAkGA1UEBhMC"..., 4096) = 4096

strace.10729-read(74, "xkIFRlY2hub2xvZ2llcywgSW5jLjEyMD"..., 4096) = 4096

strace.10729-read(74, "YDVQQKEwxTd2lzc1NpZ24gQUcxHzAdBg"..., 4096) = 4096

strace.10729-read(74, "U3lzdGVtcyBFbnRlcnByaXNlIFNlcnZp"..., 4096) = 4096

strace.10729-read(74, "RZmQnLzf9OxMUP8pI4X8W0jq5Rm+K37D"..., 4096) = 4096

strace.10729-read(74, "BT0peS2cWeqH+riTcFCQP5nRhc4L0c/c"..., 4096) = 4096

strace.10729-read(74, "CAQEABT41XBVwm8nHc2Fv\ncivUwo/yQ1"..., 4096) = 4096

strace.10729-read(74, "--\n-----BEGIN CERTIFICATE-----\nM"..., 4096) = 4096

strace.10729-read(74, "9EfX7mRB\nVXyNWQKV3WKdwrnuWih0hKW"..., 4096) = 4096

strace.10729-read(74, "opvDI5NOFlV2oHB5bc0hH88vLbwZ\n44g"..., 4096) = 4096

strace.10729-read(74, "YyZDDl34bSb+hxnV29qao6pK0xXeXpXI"..., 4096) = 4096

strace.10729-read(74, "9mREruZR\ngyFmxhE+885H7pwoHyXa/6x"..., 4096) = 4096

strace.10729-read(74, "CSEsxEjAQBgNVBAgTCUhvbmcgS29uZzE"..., 4096) = 4096

strace.10729-read(74, "D0x\nCzAJBgNVBAYTAkNOMREwDwYDVQQK"..., 4096) = 4096

strace.10729-read(74, "C+S5DwIDAQABo0IwQDAdBgNVHQ4EFgQU"..., 4096) = 1808

strace.10729-read(74, "", 4096) = 0

strace.10729-close(74) = 0

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729-getpid() = 1370

strace.10729:write(73, "\26\3\1\2\0\1\0\1\374\3\3\v\376g(\2462G+O\255\\H'Na\354\267\214\316\314E"..., 517) = 517

strace.10729:read(73, 0x7fc2688561c3, 5) = -1 EAGAIN (Resource temporarily unavailable)

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN}, {fd=71, events=POLLIN}], 2, 0) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN}, {fd=71, events=POLLIN}], 2, 172) = 1 ([{fd=73, revents=POLLIN}])

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 1 ([{fd=73, revents=POLLIN|POLLRDNORM}])

strace.10729:read(73, "\26\3\3\0z", 5) = 5

strace.10729:read(73, "\2\0\0v\3\3\263P V\202X\273OGb\250R\20\355\345)\206\303\220_?\343\222\326M\303"..., 122) = 122

strace.10729:read(73, "\24\3\3\0\1", 5) = 5

strace.10729:read(73, "\1", 1) = 1

strace.10729:read(73, "\27\3\3\t\307", 5) = 5

strace.10729:read(73, "\26a\305PX\267\n\352\vnGt\227\352\337\334C\220\v\277M\n\263\255\255c+?\109}\r"..., 2503) = 2503

strace.10729-stat("/etc/ssl/certs/8e363097.0", 0x7fc24a272430) = -1 ENOENT (No such file or directory)

strace.10729:write(73, "\24\3\3\0\1\1\27\3\3\0E\1M\215\325\273}r\325Y\314\302\4\375\37\177\321\33X}\227\\"..., 80) = 80

strace.10729:write(73, "\27\3\3\0)*5\314\240C\254>\337\355Y9O\362\360\214\341{\260\25\202\316s0i\178\r"..., 46) = 46

strace.10729:write(73, "\27\3\3\0,\355\254\352\215U\33M\323\230,\342S\330WZ\v\30\211\30\343\220J\237P\335\321\2"..., 49) = 49

strace.10729:write(73, "\27\3\3\0\36\335\36\356\257\250B\330\314\317A\346\230\177\374\273\326\350#\372:A\357\362W\365\312\364"..., 35) = 35

strace.10729:write(73, "\27\3\3\0\215\361I\376\256H\260\2423N\345\264<\221\300\224>\377\335\272\315?\212UE\373p\3"..., 146) = 146

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 0 (Timeout)

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN}, {fd=71, events=POLLIN}], 2, 160) = 1 ([{fd=73, revents=POLLIN}])

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 1 ([{fd=73, revents=POLLIN|POLLRDNORM}])

strace.10729:read(73, "\27\3\3\1\335", 5) = 5

strace.10729:read(73, "\366\316\301_\207D\2255\5=\3769\207\2\334Qm\333eA<\357\273\366\370\25t\354!\272&\334"..., 477) = 477

strace.10729:read(73, "\27\3\3\09", 5) = 5

strace.10729:read(73, "\377\237\230~UR\253]\36M\226\233\237*\245\260\3431\23\234\3416K\244\314iH\342\212:P\245"..., 57) = 57

strace.10729:write(73, "\27\3\3\0\32\7\330\301\22\332+\240\33KKZ\205\f\365\"[\233\307~\36\7\276\342)2j", 31) = 31

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 1 ([{fd=73, revents=POLLIN|POLLRDNORM}])

strace.10729:read(73, "\27\3\3\0\32", 5) = 5

strace.10729:read(73, "\265\213B\2741\25\30g=\266\365\366\375\314\213m\334l[`q+\341A}u", 26) = 26

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN}, {fd=71, events=POLLIN}], 2, 159) = 1 ([{fd=73, revents=POLLIN}])

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 1 ([{fd=73, revents=POLLIN|POLLRDNORM}])

strace.10729:read(73, "\27\3\3\5j", 5) = 5

strace.10729:read(73, ".\327\f\f\20\343\327W\30\230\252\352\367K\343\33\374\261\223\371\330~h\f'7\4\244em\363R"..., 1386) = 1386

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN}, {fd=71, events=POLLIN}], 2, 95) = 1 ([{fd=73, revents=POLLIN}])

strace.10729-rt_sigaction(SIGPIPE, NULL, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-poll([{fd=73, events=POLLIN|POLLPRI|POLLRDNORM|POLLRDBAND}], 1, 0) = 1 ([{fd=73, revents=POLLIN|POLLRDNORM}])

strace.10729:read(73, "\27\3\3\4(", 5) = 5

strace.10729:read(73, "\242I\2406[\22\266\234\3368K!p\211\341*k\22\377\342\1\224i\330\354\263\361\231\233\"\207\255"..., 1064) = 1064

strace.10729:read(73, "\27\3\3\0\32", 5) = 5

strace.10729:read(73, "\366\250\22\326gM\2771w\262:\20\nV\207\262?\177O\31\252\314yy\301I", 26) = 26

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

strace.10729-rt_sigaction(SIGPIPE, {sa_handler=SIG_IGN, sa_mask=[PIPE], sa_flags=SA_RESTORER|SA_RESTART, sa_restorer=0x7fc27565b730}, NULL, 8) = 0

The useful thing about strace is it can give you the thread ID, not just the process ID, of the thread doing the system calls. This was crucial, since Asterisk itself ran with nearly 100 threads to it. I needed a thread ID to narrow down the issue, and lsof and netstat sure couldn't give me that (after all, in a multithreaded process, any thread can do system calls on a socket... this is why the kernel doesn't give you a way to see what thread "owns" a socket... the question doesn't make any sense).

Asterisk's core show fd command hadn't been much help thus far, but I suspected this could be because it only showed active file descriptors. Maybe Asterisk thought it wasn't actively in use anymore, when it really was. At the same time, in crossreferencing thread 10729, or whatever thread that test had been shown to be responsible for the leaky socket, with core show threads, the thread didn't show up either. So, I modified `astfd.c` and `asterisk.c` in Asterisk to simply not unregister threads or idle file descriptors; in other words, once a thread was registered, it would just stay registered, so this way I could get a list of every thread ever registered in the current Asterisk process, whether it still existed or not. Likewise, I could see what file descriptors had ever been in use.

The way that Asterisk registers threads is that it uses a wrapper function around pthread_create (ast_pthread_create, for example, for joinable threads). The wrapper calls a dummy function in utils.c that actually passes the target function and arguments for the new thread as arguments to an internal function, that first registers the thread, sets up a thread exit handler to unregister the thread, and then calls the function (the internal function is dummy_start, if it matters, so called because it's not the actual desired function to execute). This allows threads spawned this way to be registered with Asterisk.

The file descriptor register is a little bit simpler, though less direct. There's a static array of 1,024 file descriptors in `astfd.c`, and the main Asterisk header file simply aliases all the I/O system calls, such as open, socket, pipe, etc. to functions here that actually do the operation and then update the array entry for the file descriptor 1 or 0, depending on whether it's in use (along with some other metadata). The downside of this, compared to threads, is that file descriptor numbers are not unique over the lifetime of a process. One a file descriptor is closed, it can be reused, and likely will be since the kernel assigns file descriptors starting at the lowest available file descriptor numbers. So, I could see that file descriptor 73, for example, was the leaky one, in strace, but sometimes I wouldn't see it in `core show fd`, and sometimes I would, but I had to conclude that this was simply something else that had been created afterwards that was reusing the same file descriptor number.

The main takeaway from this was that the leaked file descriptors were never getting registered with astfd.c. This meant that it wasn't something in Asterisk itself that was creating the file descriptor, but likely some other library with which Asterisk is linked. The system calls in the strace logs hint as much: you can see that the `read` and `write` calls are a bunch of gibberish, because HTTPS is encrypted TLS traffic. Most likely, these system calls are actually being called from something like OpenSSL, something that does encryption and decryption, and OpenSSL's system calls aren't going to be redirected to `astfd.c` since the OpenSSL code doesn't include the Asterisk header file. It's not even part of the same binary; it just happens to run in the same process since that's how the Asterisk binary is linked.

The thread register changes proved more useful. Being able to track every thread that was created in Asterisk allowed me to trace it to a thread, even one that no longer existed. After another run, I could see that this was the offending thread:

0x7fc24a286700 10729 pbx_thread started at [ 4854] pbx.c ast_pbx_start()

pbx_thread is the function that gets spawned for a channel to execute dialplan in Asterisk. That told me that this was something that a dialplan channel was likely doing, a call to some application or function that was triggering these leaks. One problem persisted: I had been making many calls, trying to do different things that would trigger HTTPS requests, and none of them seemed to do anything. I couldn't manually trigger the problem myself.

It dawned on me that I had probably missed the relevant system calls with strace earlier because I'd been periodically truncating the logs, and thus the actual system calls probably were occuring some time before I was getting notified about the CLOSE_WAIT condition. Certainly, it seemed plausible there was some kind of delay, between when the socket was actually being used, and when it entered that state. Fortunately, strace does have the capability to log timestamps as well, so I enabled them and started over again:

strace -ttffp 16501 -o strace

This time, I finally hit pay dirt. It turned out the system calls were occuring a whole 8 minutes before I was getting notified about CLOSE_WAIT sockets. This meant that by the time I got alerted, the leak had already been set in motion 8 minutes prior.

Fortunately, at this point, it became simple enough to line things up. The timestamps that strace was logging were granular to the millisecond, and I also run Asterisk with the logger configured to log to the millisecond. Now, I could exactly match up the strace logs with the Asterisk logs and pinpoint the exact application call that was triggering the issue:

strace logs:

21:31:33.378918 close(78) = 0

21:31:33.378952 close(77) = 0

21:31:33.378995 socket(AF_INET, SOCK_STREAM, IPPROTO_TCP) = 77

21:31:33.379037 setsockopt(77, SOL_TCP, TCP_NODELAY, [1], 4) = 0

21:31:33.379078 fcntl(77, F_GETFL) = 0x2 (flags O_RDWR)

21:31:33.379106 fcntl(77, F_SETFL, O_RDWR|O_NONBLOCK) = 0

21:31:33.379132 connect(77, {sa_family=AF_INET, sin_port=htons(443), sin_addr=inet_addr("172.67.175.181")}, 16) = -1 EINPROGRESS (Operation now in progress)

Asterisk logs:

[2023-01-12 21:31:33.325] NOTICE[23874]: asterisk.c:428 ast_register_thread: Registered thread 23874

[2023-01-12 21:31:33.327] -- Executing [17778760108@from-callcentric:1] Set("PJSIP/callcentric-00000002", "CDR_PROP(disable)=1") in new stack

[2023-01-12 21:31:33.329] -- Executing [17778760108@from-callcentric:2] Progress("PJSIP/callcentric-00000002", "") in new stack

[2023-01-12 21:31:33.337] > 0x559f41dd1510 -- Strict RTP learning after remote address set to: 199.87.144.74:63552

[2023-01-12 21:31:33.340] -- Executing [17778760108@from-callcentric:3] Set("PJSIP/callcentric-00000002", "pstnsource=CallCentric") in new stack

[2023-01-12 21:31:33.342] -- Executing [17778760108@from-callcentric:4] Verify("PJSIP/callcentric-00000002", "pstn-us") in new stack

[2023-01-12 21:31:33.351] -- Verification result for pstn-us is '30' (SUCCESS)

[2023-01-12 21:31:33.488] -- Executing [17778760108@from-callcentric:5] Set("PJSIP/callcentric-00000002", "__clidverif=30") in new stack

It was now crystal clear that calls to the Verify application in the dialplan were triggering the issue. It took me so long to piece this together because the issue wasn't apparent until 8 minutes later, making it difficult to correlate things that were actually relevant. The timestamps in the logs proved crucial in making this connection.

Sure enough, at this point the hypothesis was easy to test. Commenting out the call to Verify, eight minutes later: no leak. Adding it back and making another call, eight minutes later, there was a CLOSE_WAIT. So, now we had our culprit, and I was a lot closer to the answer. Verify does do a cURL request internally, and there was no question that the HTTPS request it was making was most certainly triggering the issue. However, I'd only been experiencing this issue for the past few weeks, and I hadn't meaningfully touched the code for the app_verify module in months. So, how could this have happened suddenly?

There was always the possibility that perhaps this had been an issue for a while, though, and at this point, the hard work was done. I postulated, before even looking at the code, that perhaps the cURL request was being created, but wasn't getting closed. And indeed, that's exactly what the issue was. I was cleaning up cURL if it failed, but not if it succeeded. Whoops.

So, every single cURL request, I was leaking a file descriptor, through libcurl. Of course, standard ways of debugging this kind of issue would never have suggested as much. Because libcurl doesn't register its file descriptors with Asterisk (or OpenSSL, which cURL uses in this case), Asterisk itself was clueless as to the leak, and only ran into hiccups when the kernel told it no more file descriptors were available.

Now that I finally knew what the issue was, the fix was simple, a one line patch to properly clean up after the cURL request.

Obviously, no postmortem is complete without some lessons learned. Here are some key takeaways from this experience:

- For starters, don't assume the problem is "somebody else's fault". It's usually not, and in this case, it was my own bug. I suspected as much from the outset, which is why I went to the depth I did to figure out what it could be. It seemed very unlikely this was some general bug with Asterisk itself, and it wasn't.

- Don't assume what you can exclude in your reconnaissance. I started off using

tsharkwith a bunch of filters, excluding traffic I didn't think was relevant, like SSH traffic. In the end, I ended up capturing everything, even though that ultimately didn't prove to be helpful. Along the same line of thinking, however, I made the initial mistake of assuming that strace would log what I wanted to see immediately prior to theCLOSE_WAITalerts I was getting. In fact, they were happening a full 8 minutes earlier, which is why I kept missing them at first. Start your process fresh and run strace until there's a confirmed leak. At that point, it's safe to stop strace. - Don't assume when things are happening. A similar point to the previous one, but an issue might appear to happen much later than it actually did. Finding out about an issue doesn't mean that's when the issue happened. Without timestamps, I would have spent much longer debugging this than I did. Timestamps are your friend. Use them.

- Similar to the first point, keep in the back of your mind what tools are supposed to do, and anytime there's a mismatch between what you observe and what the tool says, think critically about it. In this example, there were several mismatches, most notably

stracenot initially showing me any system calls on the offending file descriptor. This was illogical, since we know that that's exactly whatstraceis designed to do. The fact thatstracewasn't showing them doesn't mean they were invisible tostrace. It just meant that I was using the tool wrong at first. Again, usually the problem is not with the tool, it's with its user.My first thought when I set about debugging this entire problem was that perhaps it was an issue with a cURL request in a module I had recently written. Well, it turned out I was half right. It was an issue with a cURL request in a module I had written, just not the one I had written most recently. Go figure.

Log in to leave a comment!